There are many vector databases available, but most of them either require Docker or involve configurations that we don’t fully understand.

I’ve had experience with MongoDB, so when I heard that MongoDB Atlas made it easy to create vector databases, it caught my attention.

As a PHP developer familiar with MongoDB’s drivers, I decided to give it a try.

Here’s a step-by-step guide for using OpenAI to create embeddings and save them in MongoDB, followed by a method to search those embeddings using a query:

- Create a free MongoDB Atlas account.

- Create a database using the interface.

- Create a collection to store the vectors.

- Use the OpenAI API to generate embeddings.

- Search the MongoDB collection using natural language.

What are Embeddings

Before walking through the steps, we first need to understand what embeddings are.

Embeddings are numerical representations of data such as text, images, and audio. Machine learning (ML) and artificial intelligence (AI) systems use them to reason about complex knowledge domains in a way that approximates how humans do.

Once we convert data into embeddings, which are vectors with a fixed number of dimensions, we can measure how similar two pieces of data are by comparing their vectors.
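To make "comparing vectors" concrete, here is a minimal sketch of cosine similarity in plain PHP. The function name `cosineSimilarity` and the three toy vectors are made up for illustration; real embeddings have hundreds or thousands of dimensions, and Atlas computes the similarity for you once the index exists.

```php
<?php

/**
 * Cosine similarity between two equal-length numeric vectors (list arrays).
 * Returns a value in [-1, 1]; values closer to 1 mean "more similar".
 */
function cosineSimilarity(array $a, array $b): float
{
    if (count($a) !== count($b) || count($a) === 0) {
        throw new InvalidArgumentException('Vectors must be non-empty and have the same length.');
    }

    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value * $value;
        $normB += $b[$i] * $b[$i];
    }

    if ($normA === 0.0 || $normB === 0.0) {
        throw new InvalidArgumentException('Cosine similarity is undefined for a zero vector.');
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

// Toy 3-dimensional vectors, invented for this example only.
$cat = [0.9, 0.1, 0.0];
$kitten = [0.8, 0.2, 0.1];
$invoice = [0.0, 0.1, 0.9];

printf("cat vs kitten:  %.3f\n", cosineSimilarity($cat, $kitten));
printf("cat vs invoice: %.3f\n", cosineSimilarity($cat, $invoice));
```

The first pair should score much closer to 1 than the second, which is the property a vector search relies on.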

Create MongoDB Atlas account

Use the link to register for a MongoDB Atlas account.

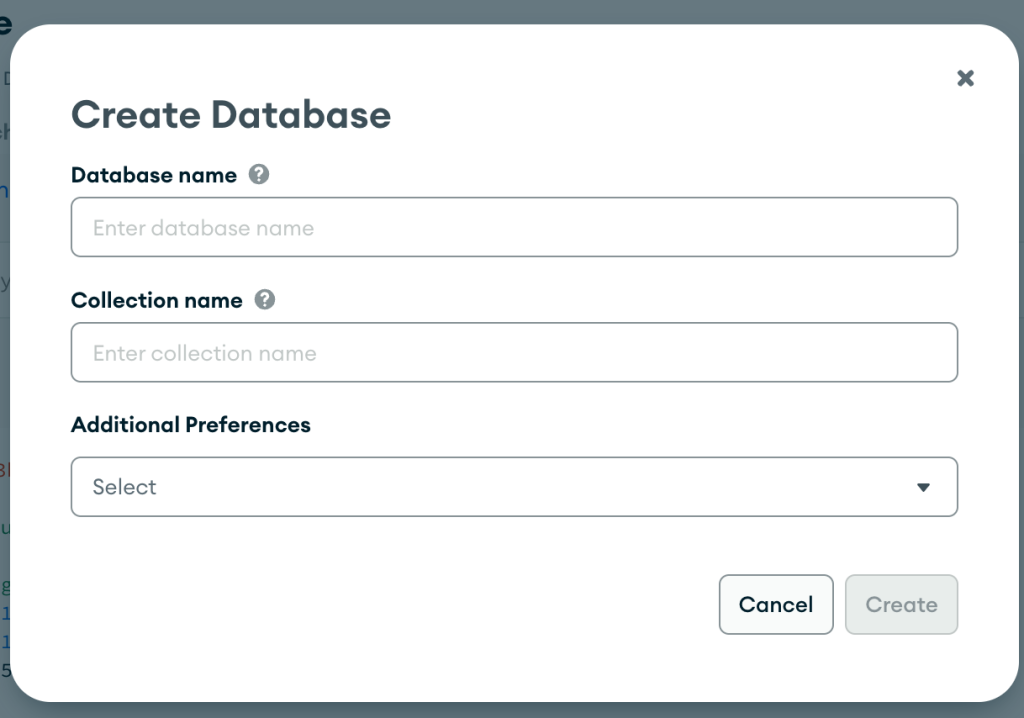

Create a database using the interface

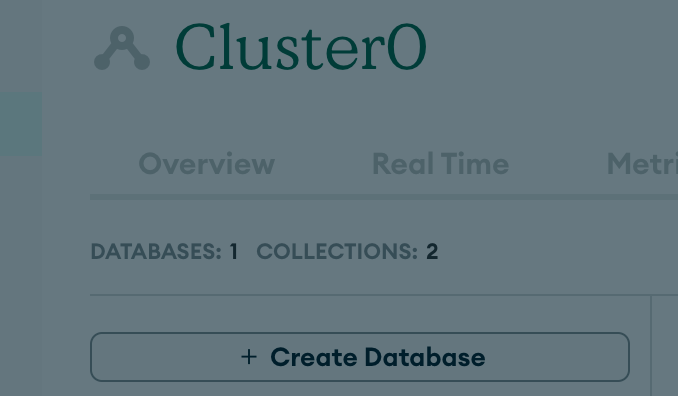

When you login in your MongoDB Atlas dashboard, you access to your cluster and create a new database:

Create a collection where we are going to save our vectors

A collection is like a table in a relational database. We will need to create a collection to save our embeddings.

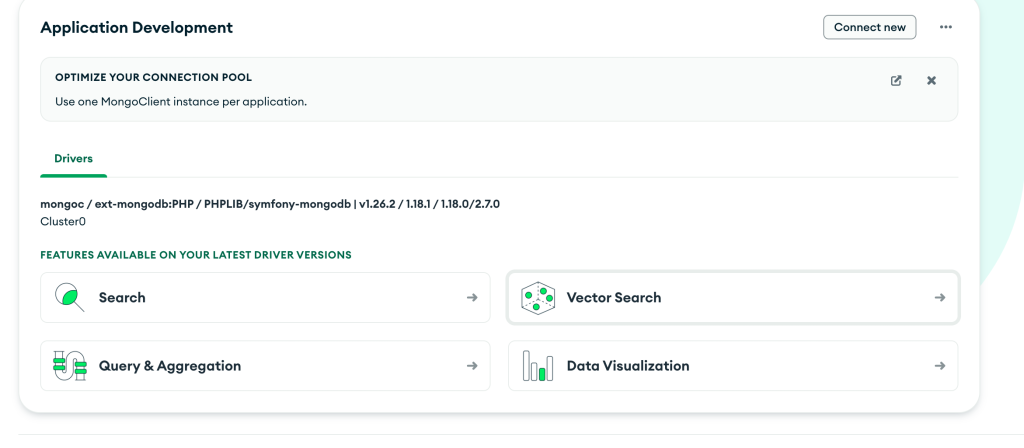

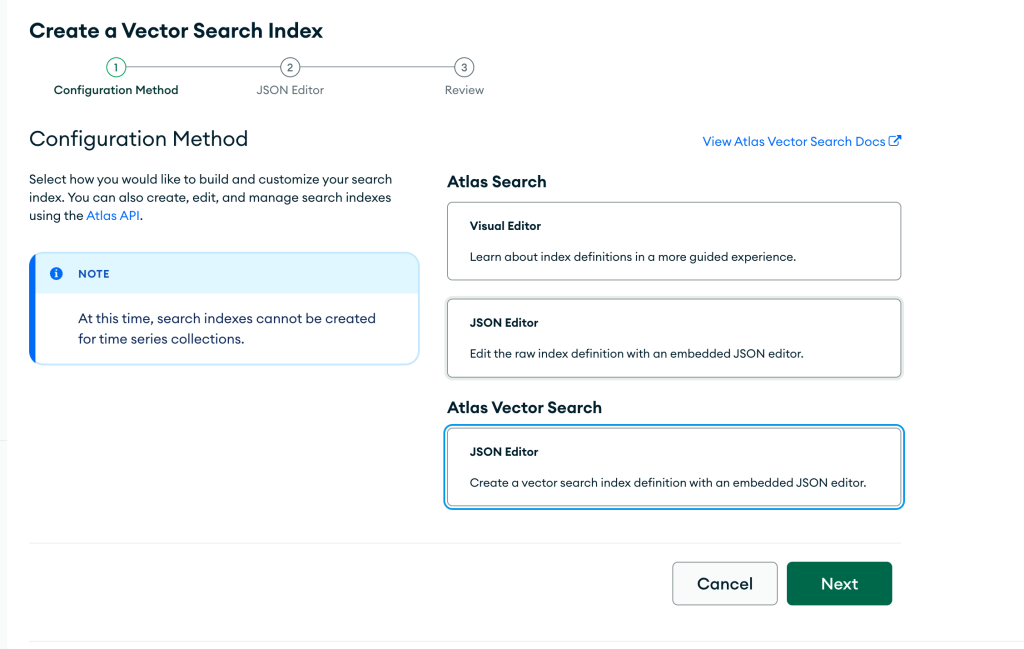

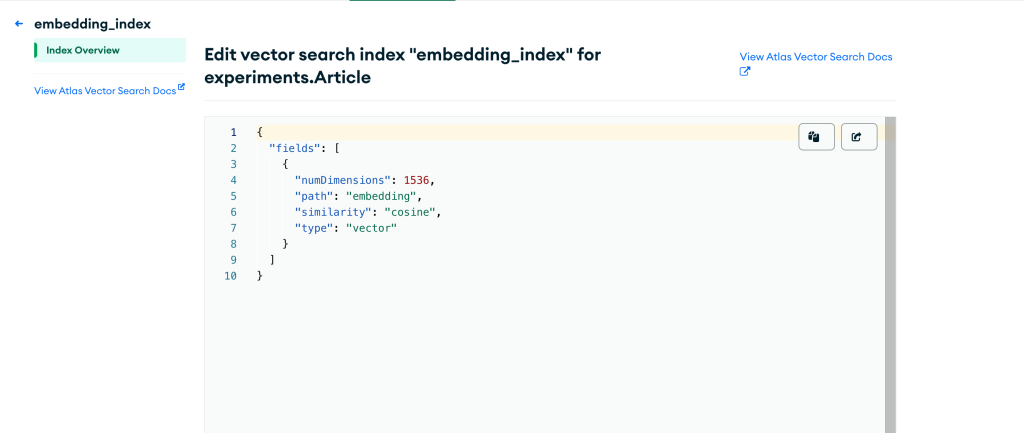

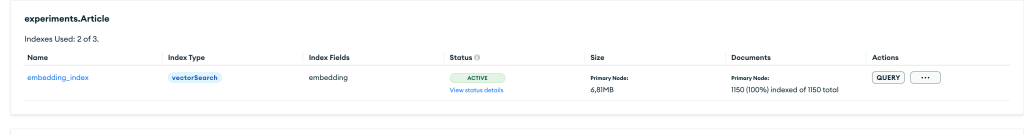

After you create the collection, create a special index on it, called a vector search index:

- In your dashboard, select the “VectorSearch” option.

- Select the “JSON Editor” option.

- Define the index. For this you need to know the number of dimensions of the vectors you will store.

We are going to use OpenAI’s small embedding model, text-embedding-3-small. According to the OpenAI documentation (https://platform.openai.com/docs/guides/embeddings/what-are-embeddings):

“By default, the length of the embedding vector will be 1536 for text-embedding-3-small or 3072 for text-embedding-3-large. You can reduce the dimensions of the embedding by passing in the dimensions parameter without the embedding losing its concept-representing properties. We go into more detail on embedding dimensions in the embedding use case section.”

For the similarity function, we will use “cosine”.
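Putting those choices together, the index definition could look roughly like the output of the sketch below. It assumes the vectors are stored in a field named `embedding` and uses the 1536 dimensions quoted above. The variable name `$indexDefinition` is only illustrative, and the index name itself (this guide uses `vector_index` in the search query) is entered separately in the Atlas interface.

```php
<?php

// Index definition to paste into the Atlas Vector Search JSON Editor.
$indexDefinition = [
    'fields' => [
        [
            'type' => 'vector',
            'path' => 'embedding',    // document field that stores the vector
            'numDimensions' => 1536,  // default length for text-embedding-3-small
            'similarity' => 'cosine',
        ],
    ],
];

// Print the definition as JSON so it can be copied into the editor.
echo json_encode($indexDefinition, JSON_PRETTY_PRINT), PHP_EOL;
```

If you later switch models or pass a `dimensions` parameter to OpenAI, `numDimensions` has to change to match.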

Use OpenAI API to generate embeddings

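In PHP, you can install the OpenAI client with Composer:

    composer require openai-php/client

Then create the client and request an embedding:

    require 'vendor/autoload.php';

    $openAiClient = OpenAI::client($openAiKey); // $openAiKey holds your OpenAI API key

    $response = $openAiClient->embeddings()->create([
        'model' => 'text-embedding-3-small',
        'input' => $text,
    ]);

Here $text is the text you want to create an embedding for.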

You can save the embedding directly in your MongoDB collection, next to the original text:

    $mongoClient = new MongoDB\Client($mongoDbUri);
    $collection = $mongoClient->selectCollection($databaseName, $collectionName);

    // Store the text together with its embedding vector
    $collection->insertOne([
        'text' => $text,
        'embedding' => $response->embeddings[0]->embedding,
    ]);

Now it is possible to query MongoDB using natural language to find similar texts in your collection.

Search the MongoDB collection using natural language.

Searching using vectors is very straightforward because we already created the index. We can search by using the following query:

    $search = 'How to create an AI Business';

    // Embed the search text with the same model used for the stored documents
    $client = OpenAI::client($openAiKey);
    $response = $client->embeddings()->create([
        'model' => 'text-embedding-3-small',
        'input' => $search,
    ]);

    $query = [
        ['$vectorSearch' => [
            'index' => 'vector_index',
            'path' => 'embedding',
            'queryVector' => $response->embeddings[0]->embedding,
            'numCandidates' => 3, // must be greater than or equal to limit
            'limit' => 3,
        ]],
    ];

    // Execute the search query
    $result = $collection->aggregate($query);

    foreach ($result as $document) {
        // Ask ChatGPT to generate a summary
        $summary = $client->chat()->create([
            'model' => 'gpt-3.5-turbo',
            'messages' => [
                ['role' => 'system', 'content' => 'Generate a summary of this article'],
                ['role' => 'user', 'content' => $document['text']],
            ],
        ]);

        echo 'Summary: ' . $summary->choices[0]->message->content . PHP_EOL;
    }

After running the query, we can even ask ChatGPT to summarize each text that was fetched.
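If you also want to see how close each match is, one option is to add a `$project` stage that exposes the search score. The sketch below only builds the pipeline array, so it runs without a database. `buildVectorSearchPipeline` is a hypothetical helper name, the default of 100 candidates is an assumption rather than a value from this guide, and the three-number vector is a placeholder for a real 1536-dimension embedding.

```php
<?php

/**
 * Builds an aggregation pipeline that runs a vector search and returns
 * each matching text together with its similarity score.
 */
function buildVectorSearchPipeline(array $queryVector, int $limit = 3, int $numCandidates = 100): array
{
    if ($numCandidates < $limit) {
        throw new InvalidArgumentException('numCandidates must be greater than or equal to limit.');
    }

    return [
        ['$vectorSearch' => [
            'index' => 'vector_index',
            'path' => 'embedding',
            'queryVector' => $queryVector,
            'numCandidates' => $numCandidates,
            'limit' => $limit,
        ]],
        ['$project' => [
            '_id' => 0,
            'text' => 1,
            // Expose the similarity score computed by the $vectorSearch stage
            'score' => ['$meta' => 'vectorSearchScore'],
        ]],
    ];
}

// Placeholder vector for illustration; use the embedding of your search text instead.
$pipeline = buildVectorSearchPipeline([0.1, 0.2, 0.3]);
echo json_encode($pipeline, JSON_PRETTY_PRINT), PHP_EOL;
```

Passing the result to `$collection->aggregate($pipeline)` should give documents where `$document['score']` sits next to `$document['text']`, which helps when deciding whether a match is good enough to summarize.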
